Unlocking LLM Embeddings: Visualizing AI Language Models

Description

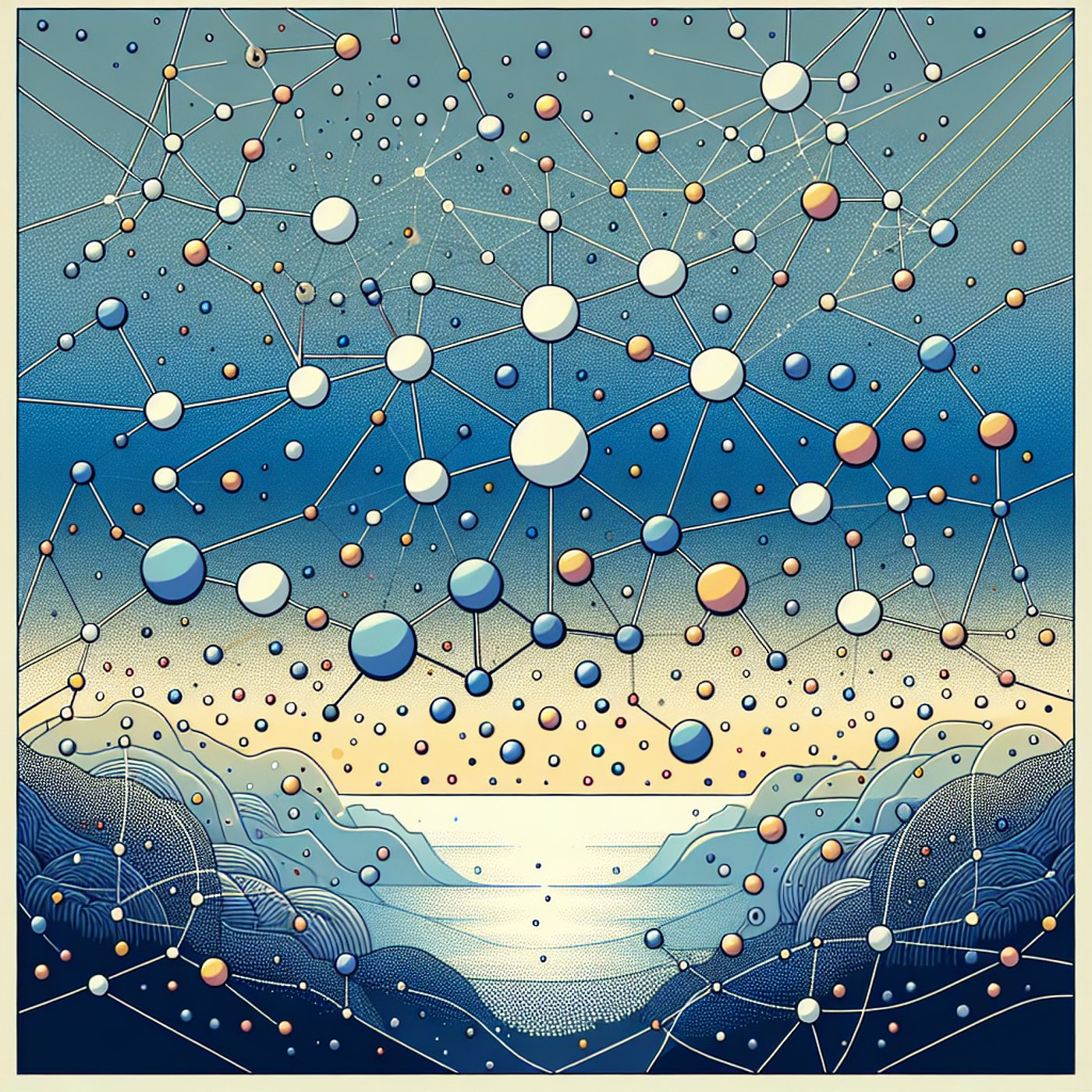

Explore the fascinating world of LLM embeddings in this episode! Join our host and expert as they break down how large language models transform text into numerical representations, making it possible for machines to effectively analyze language. Discover the difference between traditional static embeddings and modern dynamic ones that adapt based on context. Learn how these embeddings enhance search engines and improve information retrieval by focusing on meaning rather than just keywords. Plus, delve into the Hugging Face Space by hesamation, a valuable visual guide that makes understanding embeddings more intuitive. Perfect for anyone looking to grasp the intricacies of natural language processing and machine learning!

Show Notes

## Key Takeaways

1. LLM embeddings convert words into numerical representations for machine understanding.

2. Modern embeddings are dynamic and context-sensitive, unlike traditional static ones.

3. Embeddings enable better information retrieval in search engines by focusing on meaning.

4. The Hugging Face Space provides an intuitive visual guide to LLM embeddings.

## Topics Discussed

- What are LLM embeddings?

- Importance of numerical representation for language models

- Difference between static and dynamic embeddings

- Real-world applications of embeddings in search engines

- Overview of the Hugging Face Space visual guide

Transcript

Host

Welcome back to the show, everyone! Today, we've got a fascinating topic: LLM embeddings. If you've ever wondered how language models understand and process text, you're in the right place!

Expert

Absolutely! I'm excited to dive into this. So, LLM stands for Large Language Model, and embeddings are essentially the way these models turn words and phrases into numbers, which they can then work with.

Host

That sounds interesting! But why do we need to convert words into numbers? Can't the models just work with the text directly?

Expert

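Great question! Think of it this way: computers ultimately work with numbers, and a neural network in particular is built out of arithmetic, multiplying and adding long lists of numbers called vectors. Text is already stored as bits, but those character codes say nothing about meaning. By converting words into meaningful numerical representations, we make it possible for machines to analyze and manipulate language far more effectively.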
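To make the idea concrete, here is a minimal sketch of that first step: map each word to an integer ID, then look up a vector for that ID in an embedding table. The tiny vocabulary, the 4-dimensional vectors, and the names `VOCAB`, `EMBEDDING_TABLE`, `tokenize`, and `embed` are illustrative assumptions. Real LLMs split text into subword tokens, use vocabularies with tens of thousands of entries, and learn vectors with hundreds or thousands of dimensions during training.

```python
import random

# Hypothetical toy vocabulary; real models use learned subword tokenizers.
VOCAB = {"<unk>": 0, "the": 1, "king": 2, "queen": 3, "apple": 4}
EMBEDDING_DIM = 4

# One row of numbers per token ID. These start random here; in a real
# model they are adjusted during training.
rng = random.Random(0)
EMBEDDING_TABLE = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBEDDING_DIM)]
    for _ in range(len(VOCAB))
]


def tokenize(text: str) -> list[int]:
    """Lowercase, split on whitespace, and map each word to its ID (unknown words -> <unk>)."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]


def embed(text: str) -> list[list[float]]:
    """Return one embedding vector per token by indexing into the table."""
    return [EMBEDDING_TABLE[token_id] for token_id in tokenize(text)]


ids = tokenize("The king the queen")
print(ids)  # [1, 2, 1, 3]
vectors = embed("The king the queen")
print(len(vectors), len(vectors[0]))  # 4 4
```

Note that a plain lookup like this always returns the same vector for the same word, which is exactly the "static" behavior discussed later in the episode.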

Host

Got it! So how exactly do these embeddings work?

Expert

Well, embeddings capture the meanings of words in a high-dimensional space. Imagine a map where similar words are located closer together. For example, 'king' and 'queen' would be near each other, while 'apple' would be far away.
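The "map" picture is usually made precise with cosine similarity: the cosine of the angle between two vectors, which ranges from -1 to 1, with values near 1 meaning the vectors point in nearly the same direction. The sketch below uses three hand-picked 3-dimensional vectors chosen purely for illustration (they do not come from any real model) to show "king" scoring as closer to "queen" than to "apple".

```python
import math

# Hand-picked toy vectors for illustration only. The axes can be read
# loosely as "royalty", "person", and "food"; dimensions of real learned
# embeddings are generally not individually interpretable.
TOY_EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.0, 0.1, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for other in ("queen", "apple"):
    score = cosine_similarity(TOY_EMBEDDINGS["king"], TOY_EMBEDDINGS[other])
    print(f"king vs {other}: {score:.3f}")
```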

Host

That’s a helpful visual! How do these embeddings differ between traditional and modern language models?

Expert

Traditionally, embeddings were static: in models such as word2vec or GloVe, each word had one fixed vector regardless of context. Modern transformer-based models, however, generate dynamic, or contextual, embeddings that change depending on the surrounding words. This allows for a much richer understanding.

Host

So if I say 'bank' in 'river bank' versus 'savings bank,' the model would understand the different contexts?

Expert

Exactly! And that's where the magic happens. Because each embedding reflects its context, the model can tell the river sense of 'bank' apart from the financial one.
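The 'bank' example can be sketched in a few lines. A static lookup returns the same vector for "bank" in both phrases, while a toy contextual step replaces each word's vector with a weighted average of all the words in the phrase, using a softmax over scaled dot-product scores as the weights. This is a heavily simplified stand-in for the self-attention used in transformer models (one layer, one head, no learned query/key/value projections), and the 2-dimensional vectors and function names are made up for illustration.

```python
import math

# Made-up 2-D static vectors: axis 0 ~ "nature/water", axis 1 ~ "finance".
STATIC = {
    "river":   [1.0, 0.0],
    "savings": [0.0, 1.0],
    "bank":    [0.5, 0.5],  # ambiguous: halfway between the two senses
}


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextual(words: list[str]) -> list[list[float]]:
    """Toy self-attention: each output vector is a softmax-weighted average
    of every input vector, weighted by scaled dot-product scores."""
    vectors = [STATIC[w] for w in words]
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)])
    return outputs


river_bank = contextual(["river", "bank"])[1]
savings_bank = contextual(["savings", "bank"])[1]
print("static bank:           ", STATIC["bank"])
print("bank in 'river bank':  ", [round(x, 3) for x in river_bank])
print("bank in 'savings bank':", [round(x, 3) for x in savings_bank])
```

The static vector for "bank" never changes, while the contextual one leans toward the "water" axis next to "river" and toward the "finance" axis next to "savings".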

Host

That’s impressive! Can you give us an example of how these embeddings are used in real life?

Expert

Sure! One popular application is in search engines. When you type in a query, the search engine uses embeddings to match your meaning with relevant documents, even if the exact words don't match.

Host

Ah, so it's not just about keywords, but the underlying meaning behind them!

Expert

Precisely! This leads to more relevant search results, making information retrieval much more effective.
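Here is a toy comparison of keyword matching and embedding-based (semantic) search. The query and each document are embedded by averaging made-up word vectors (mean pooling, one simple way to get a single vector for a piece of text), and documents are ranked by cosine similarity to the query. The word vectors, documents, and function names are illustrative assumptions; production systems use a trained embedding model and usually an approximate nearest-neighbor index rather than a linear scan.

```python
import math

# Made-up 3-D word vectors (axes loosely: vehicles, maintenance, food).
WORD_VECTORS = {
    "automobile": [1.0, 0.0, 0.0],
    "car":        [0.9, 0.1, 0.0],
    "engine":     [0.8, 0.2, 0.0],
    "repair":     [0.0, 1.0, 0.0],
    "fixing":     [0.1, 0.9, 0.0],
    "apple":      [0.0, 0.0, 1.0],
    "pie":        [0.0, 0.1, 0.9],
    "recipe":     [0.0, 0.2, 0.8],
}
DIM = 3
DOCUMENTS = ["fixing your car engine", "apple pie recipe"]


def keyword_overlap(query: str, doc: str) -> int:
    """Count the words the query and document share exactly."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def embed_text(text: str) -> list[float]:
    """Mean-pool the vectors of known words; unknown words are skipped."""
    known = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not known:
        return [0.0] * DIM
    return [sum(v[i] for v in known) / len(known) for i in range(DIM)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def semantic_search(query: str, docs: list[str]) -> list[tuple[float, str]]:
    """Rank documents by cosine similarity between query and document embeddings."""
    q = embed_text(query)
    return sorted(((cosine_similarity(q, embed_text(d)), d) for d in docs), reverse=True)


query = "automobile repair"
for doc in DOCUMENTS:
    print(f"keyword overlap with {doc!r}: {keyword_overlap(query, doc)}")
for score, doc in semantic_search(query, DOCUMENTS):
    print(f"{score:.3f}  {doc}")
```

Neither document shares a word with the query, so keyword overlap scores both at 0, while the embedding ranking puts the car-repair document first.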

Host

What about visual guides? I heard there's a Hugging Face Space that provides a visual way to understand this?

Expert

Yes! The Hugging Face Space by hesamation offers a visual and intuitive guide to LLM embeddings. It helps users visualize how words are transformed into embeddings, making the concept more approachable.

Host

That sounds like a fantastic resource! I think visuals can really help cement these concepts.

Expert

Absolutely! When you can see how words relate to each other in the embedding space, it makes the learning process much easier.
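To draw embeddings on a screen, a tool has to squeeze vectors with hundreds of dimensions down to two or three. We don't know which technique the hesamation Space uses; one common choice for such plots is principal component analysis (PCA), with t-SNE and UMAP as popular alternatives. The sketch below implements 2-D PCA in plain Python, using power iteration plus deflation to find the two directions of greatest variance. The 4-dimensional word vectors and function names are made up for illustration; a real project would more likely use a library implementation such as scikit-learn's `PCA`.

```python
import math
import random

# Made-up 4-D vectors for illustration only (not from a real model).
WORDS = {
    "king":   [0.9, 0.8, 0.1, 0.0],
    "queen":  [0.9, 0.7, 0.2, 0.1],
    "prince": [0.8, 0.9, 0.1, 0.0],
    "apple":  [0.0, 0.1, 0.9, 0.8],
    "banana": [0.1, 0.0, 0.8, 0.9],
}


def center(rows: list[list[float]]) -> list[list[float]]:
    """Subtract each dimension's mean so PCA measures spread around the center."""
    dim = len(rows[0])
    means = [sum(r[i] for r in rows) / len(rows) for i in range(dim)]
    return [[r[i] - means[i] for i in range(dim)] for r in rows]


def covariance(rows: list[list[float]]) -> list[list[float]]:
    """Sample covariance matrix of already-centered rows."""
    n, dim = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(dim)] for i in range(dim)]


def top_component(matrix: list[list[float]], iterations: int = 200, seed: int = 0) -> tuple[list[float], float]:
    """Power iteration: repeatedly multiply by the matrix and renormalize,
    converging to the eigenvector with the largest eigenvalue."""
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in matrix]
    for _ in range(iterations):
        w = [sum(m * x for m, x in zip(row, v)) for row in matrix]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # the matrix sends v to zero; nothing left to find
        v = [x / norm for x in w]
    # Rayleigh quotient v^T M v gives the matching eigenvalue for a unit vector v.
    eigenvalue = sum(vi * sum(m * x for m, x in zip(row, v)) for vi, row in zip(v, matrix))
    return v, eigenvalue


def pca_2d(rows: list[list[float]]) -> list[tuple[float, float]]:
    """Project rows onto their first two principal components."""
    centered = center(rows)
    cov = covariance(centered)
    pc1, lam1 = top_component(cov)
    # Deflation: remove pc1's contribution so the next power iteration finds pc2.
    size = len(cov)
    deflated = [[cov[i][j] - lam1 * pc1[i] * pc1[j] for j in range(size)] for i in range(size)]
    pc2, _ = top_component(deflated, seed=1)
    return [
        (sum(a * b for a, b in zip(r, pc1)), sum(a * b for a, b in zip(r, pc2)))
        for r in centered
    ]


points = pca_2d(list(WORDS.values()))
for word, (x, y) in zip(WORDS, points):
    print(f"{word:>7}: ({x:+.2f}, {y:+.2f})")
```

Plotting the printed coordinates would show "king", "queen", and "prince" clustered on one side and the two fruits on the other, which is the kind of picture the episode describes.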

Host

Thanks for breaking this down for us! I'm excited to explore LLM embeddings further.

Expert

My pleasure! It's a fascinating area, and there's so much to learn. Happy exploring!
